Sample Size, Kids and Bears, Oh My: Part Two

Sample Size and Representativeness

When it comes to how big a survey sample should be, a couple of factors come into play. In my last post, I talked about the importance of power in statistical significance tests. In sum: a bigger sample is usually better—there’s more of an opportunity to discern relationships.

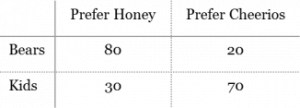

However, if we have a big sample but only a few respondents represent our target population, then we can’t derive much meaning from our results. Let’s revisit one of our examples from the previous post. We conducted a survey about food preferences with 100 bears and 100 kids:

How confident are we that these results represent the larger populations of American bears and kids? Can we generalize about the entire bear and kid populations in the US based on these results? What about the bears who prefer salmon over anything else, and the kids who prefer banana slices?

When we ask about the “generalizability” and “representativeness” (yep, those are real statistical terms) of our results, we’re actually asking this: Are we confident that we could consistently produce the same findings from surveying different groups of bears and kids? There are a couple of ways to try to increase that likelihood of this from the get-go, including:

- Calculating the margin of error, which tells us how many respondents we should aim for in a sample. The more respondents, the more likely they are to represent—that is, lead to accurate findings about—our target population. This is the +% you often see on survey or poll results, and +5% is the standard often used. Just know that a smaller margin of error requires a larger sample size.

- Statistical sampling, which is a way of selecting respondents from subsets of the target population that are proportional to their actual population. However, this can be a costly, time-intensive process.

So what if we’re not able to hit either of these ideal sweet spots?

One alternative is to use descriptive analyses to indicate how similar—or different—our survey respondents are to the population we’re studying. Once we’ve gathered our data, respondents’ demographic characteristics can inform how we frame our results. Going back to our example, perhaps our demographic data on our respondents indicates that most of our bear respondents were from California and our kid respondents from Minnesota. With this information, we can frame our findings accordingly: Black bears from California and kids were from Minnesota prefer different foods. We can also use this technique during data collection to do more targeted follow-up if we notice a particular subset of respondents are not completing the survey. So, even though small sample size limits our ability to make a big, sweeping, generalized statement about the population, we can still derive meaningful, nuanced data from the respondents we do have.

We can’t make people take surveys to fatten up our sample size, and we also can’t hand-pick exactly who takes them. As evaluators, we know there are always several factors that are beyond our control, and sample size is very often one of them.

What we’ve discovered during this blog series is that getting meaningful results from surveys is less about how big the sample is and more about the extent to which it reflects the population we’re interested in evaluating. Controlling for sample size is a luxury; sometimes, it makes more sense to put the limited resources toward analyzing the responses we have. Rather than spending time tracking down more respondents to increase sample size, we can spend that time understanding our population and the data we have. We can then frame our findings in a way that accurately brings that data to life and enriches our audiences’ understanding.